Common misconceptions on the radiation hardness of integrated circuits

Space exploration has always been fascinating, and recent developments have raised public interest to heights not seen since the last man stood on the Moon. People argue about Mars exploration and the features of spaceships just as their grandparents would have done if the internet had existed fifty years ago. I’m an electronics engineer working in the aerospace industry, so I know a thing or two about the technical background of this stuff, and I see that these things aren’t common knowledge: people often have significantly skewed ideas about the reasons behind some devices and decisions. Specifically, I’d like to talk about some misconceptions related to radiation hardened integrated circuits and the ways of protecting them against radiation-induced damage. But, I warn you, this text will be relatively long.

Why do I write this?

The most popular theses about radiation hardness of ICs are the following:

Radiation hardened chips are not needed at all. CubeSats are just fine with chips from the nearest store, very ordinary Lenovo laptops work on the ISS without any problems, and even NASA-commissioned Orion onboard computer is based on a commercial microprocessor!

Satellites don’t need computational power, but they need these magical radiation hardened chips, so most of them use very old but extremely robust designs from the eighties, like TTL quad NAND gates.

A thesis that complements the previous one: it is impossible to achieve radiation hardness on modern process nodes. Ionizing particles just tear small transistors apart. So, the use of these TTL NAND gates is not just justified, it’s the only way to go.

It’s necessary and sufficient to use silicon on insulator (SOI) or silicon on sapphire (SOS) technology to achieve radiation hardness.

All military-grade chips are radiation hardened and all radiation hardened chips are military-grade. If you have a military-grade IC, you can safely launch it into outer space.

As one can see, these theses directly contradict each other — which makes arguing on the internet even funnier, especially if you take into account that not a single one of them is true.

Let’s start with an important disclaimer: radiation hardness is not the Holy Grail of integrated circuit design for space and other similar environments. It’s just a bunch of checkboxes in a long list of requirements, which typically includes reliability, longevity, a wide temperature range, tolerance to electrostatic discharge and vibration, and many more. Everything that can compromise reliable functioning through the entire lifetime is important, and most applications requiring radiation tolerance also assume that repair or replacement is impossible. On the other hand, if something is wrong with one of the parameters, the designers of the final system can often find a workaround: tighten the temperature requirements, use cold spares or add protection circuitry, whatever is suitable. The same approach can work for radiation effects: majority voting, supply current monitoring and reset are very common measures that are often effective. But it is also often the case that a brand new radiation hardened IC is the only good way to meet the mission requirements.

It is also useful to remember that the developers of special-purpose systems are the same people as any other developers. Just like anyone else, they normally write code full of hacks to meet yesterday’s deadline and want more powerful hardware to mask their sloppy job; some would’ve used Arduino if it were properly certified. And it’s also obvious that the people who write requirements are rarely concerned with any limitations and want the same as in commercial systems, but more reliable and radhard. Therefore, modern processes are more than welcome in radhard electronics: system designers would love to have large amounts of DRAM, multi-core processors, and the most advanced FPGAs. I have already mentioned that there can be workarounds for mediocre radiation tolerance, so the use of commercial chips is limited more by the lack of data on what the problems are than by the problems themselves or by the commercial status of the chips.

What are radiation effects

The very concepts of "radiation hardness" and "radiation hardened IC" are enormous simplifications. There are many different sources of ionizing and non-ionizing radiation, and they affect the functioning of microelectronic devices in multiple ways. Different applications require tolerance to different sets of conditions and levels of exposure, so a “radiation hardened” circuit designed for low Earth orbit is by no means guaranteed to work in a robot clearing debris in Chernobyl or Fukushima.

Ionizing radiation is called so because an incoming particle decelerating in a substance releases energy and ionizes that substance. Each material has its own energy required for ionization and the creation of an electron-hole pair: 3.6 eV for silicon, 17 eV for its oxide, 4.8 eV for gallium arsenide. The released energy can also “shift” an atom out of its proper place in the crystal lattice (21 eV must be transferred to shift a silicon atom). Electron-hole pairs created in a substance can produce different effects in an integrated circuit. Radiation effects can therefore be divided into four large groups: total ionizing dose (TID) effects, dose rate effects, single event effects (SEE), and the non-ionizing effects called displacement damage. This separation is somewhat arbitrary: for example, irradiation with a stream of heavy ions causes both single event effects and accumulation of total ionizing dose.

Total ionizing dose

The total absorbed dose of radiation is measured in units called “rad”, with an indication of the substance absorbing the radiation. 1 rad = 0.01 J/kg: it is the amount of energy released per unit mass of a given substance. The gray, equal to 100 rad (or 1 J/kg), is another, albeit rarer, unit. It is important to understand that the same amount of ionizing particles released by a source of radiation (called radiation exposure) translates into different levels of absorbed dose in different substances. The material of choice for silicon ICs is silicon oxide, because the very low hole mobility in SiO2 causes charge to accumulate in the oxide, producing various total dose effects. Typical dose levels for commercial circuits are in the range of 5-100 krad (SiO2). The levels that are in actual demand for practical applications start around 30 krad (SiO2) and go as far as a few Grad (SiO2), depending on the purpose of the chip. Yes, gigarads. For comparison, the lethal dose for a human is around 6 gray.
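To put these numbers side by side, here is a minimal conversion sketch. The only fact it relies on is the 1 Gy = 100 rad relation given above; the 1 Grad entry is an illustrative stand-in for "a few Grad", and the comparison with the human lethal dose is only an order-of-magnitude one, since doses in tissue and in SiO2 are not the same quantity.

```python
RAD_PER_GRAY = 100.0  # 1 Gy = 1 J/kg = 100 rad, as stated in the text


def gray_to_rad(gray: float) -> float:
    """Convert an absorbed dose from gray to rad."""
    return gray * RAD_PER_GRAY


def rad_to_gray(rad: float) -> float:
    """Convert an absorbed dose from rad to gray."""
    return rad / RAD_PER_GRAY


# Dose levels quoted in the text, expressed in rad.
# The 1 Grad entry is an illustrative value for "a few Grad".
doses_rad = {
    "lethal dose for a human (about 6 Gy)": gray_to_rad(6.0),
    "commercial chip, low end (5 krad)": 5e3,
    "commercial chip, high end (100 krad)": 100e3,
    "demanding application (1 Grad)": 1e9,
}

for label, rad in doses_rad.items():
    print(f"{label}: {rad:.3g} rad = {rad_to_gray(rad):.3g} Gy")
```

Even the low end of the commercial range is several times the dose that is lethal for a human, and the high-end requirements are millions of times higher.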

The TID effects are mostly associated with the accumulation of positive charge in dielectrics. They manifest themselves in CMOS circuits in several main ways:

Threshold voltage shift. For n-channel transistors, the threshold is usually reduced (but the dependence may be non-monotonic, especially at high doses), while for p-channel transistors it increases. The shift magnitude correlates to gate oxide thickness and decreases with process node. In older technologies, n-MOSFET threshold shift can cause functional failure when n-channel transistors stop closing and p-channel ones stop opening. This effect is less important in submicron technologies, but it can still give a lot of headaches to analogue designers.

Leakage currents flow through parasitic channels opened by excess charge in isolating oxides, either from source to drain of the same device, or from one transistor to another. In the first case, a parasitic transistor controlled by the total dose is formed in parallel to the main one. The severity of this effect is highly technology-dependent, as the exact shape of the isolating oxide matters. Therefore, there is no direct correlation with process node, and there is no good way to guess which commercial device will have better or worse TID hardness.

Charge carrier mobility decreases due to scattering on accumulated defects. The influence of this factor on submicron digital silicon circuits is small, but it is far more important for power transistors (including GaN HEMTs).

1/f noise increase caused by parasitic edge transistors. It is important for analogue and radio frequency circuits and becomes more important at lower process nodes when the influence of other TID effects gradually decreases.

A quick word on bipolars: the main TID effect there is a gain decrease due to a leakage-related increase in base current. Another bipolar-specific effect, present in some devices but not all, is a stronger degradation when the dose is accumulated at a low rate, the so-called ELDRS (Enhanced Low Dose Rate Sensitivity). This effect complicates testing and makes it more expensive. And the worst part is that many CMOS circuits contain a few bipolars (namely in voltage reference circuits) and can therefore also be susceptible.

Dose rate effects

Another kind of dose-related effect appears when the dose accumulates so fast that the sheer number of generated electron-hole pairs floods every node of the chip with excess charge, causing a temporary loss of functionality and sometimes a latchup of the parasitic thyristor between supply and ground. The usual measure of sensitivity to this kind of effect is the time during which the chip does not function, and such requirements are normally found in military standards like Mil-Std-883.

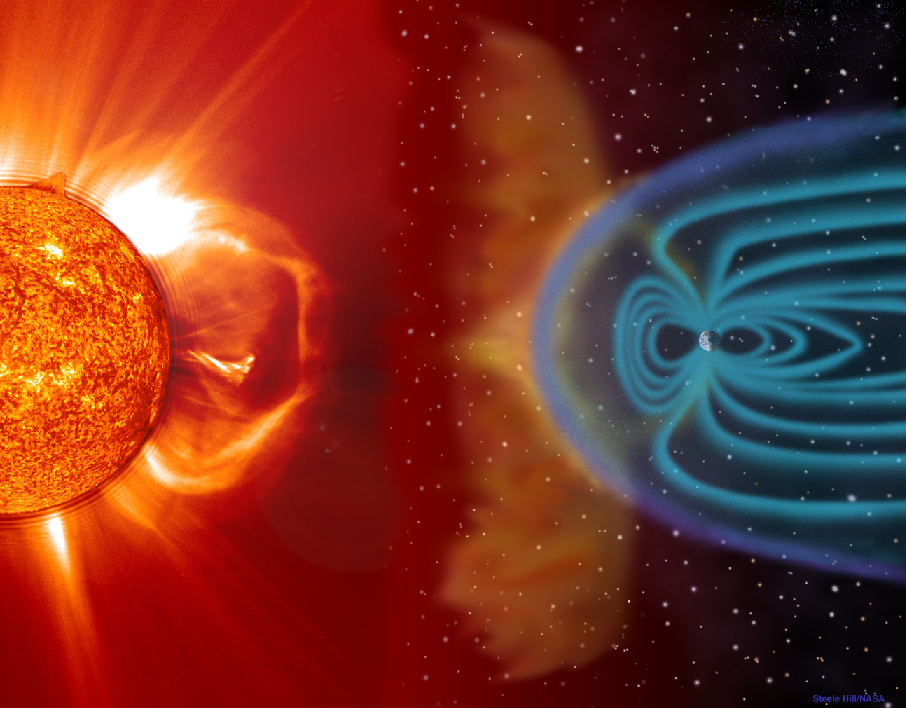

Dose rate effects are the reason “silicon on sapphire” (SOS) and “silicon on insulator” (SOI) technologies were created and adopted: the best way to reduce the amount of charge injected into active devices by a flow of ionizing particles is to cut their electrical connection to the enormously big substrate (and to each other). Why are these effects important? An extremely high dose rate for a short time is a typical consequence of a nuclear explosion, and military people all around the world care deeply about this matter. Luckily for us, SOI proved to be advantageous in many other applications and therefore became widespread in normal life.

Single event effects

Single event effects (SEE) are associated with a measurable effect from the strike of a single ionizing particle. They can be divided into two large groups:

Non-destructive events include bit flips or upsets (SEU) in a variety of storage elements (cache memory cells, register files, FPGA configuration memory, etc.) and transient voltage spikes (SET) in combinational logic and in analogue circuits. The main feature of these effects is that they do not lead to the physical destruction of the chip and can be corrected by software or hardware. Moreover, single event transients correct themselves after a short time. Memory upsets are the best known of these effects, as they constitute the lion’s share of failures due to the enormous amount of memory in modern digital ICs.

Destructive events are single event latchup (SEL) and a variety of fortunately rarer catastrophic failures like transistor burnout or gate rupture. Their distinctive feature is that they are, well, destructive: they irreversibly damage the chip when they occur. Latchup is a special case, as a very fast power-off can often (but not always!) save the chip. Circuits for supply current monitoring and power cycling are fairly popular as a latchup protection measure. Other destructive effects are uncommon in CMOS circuitry, but are a serious threat for some types of flash memory and for high voltage devices, including power switches.
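The supply current monitoring mentioned above can be sketched as a simple trip logic. This is only an illustration under assumptions: the `LatchupMonitor` class, the 150 mA limit and the three-sample filter are hypothetical and not taken from any real design. Real latchup protection is normally implemented in hardware, because the power-off has to be very fast.

```python
from dataclasses import dataclass, field


@dataclass
class LatchupMonitor:
    """Hypothetical trip logic: request a power cycle after several
    consecutive supply current samples above the limit."""
    limit_ma: float            # trip threshold (illustrative value)
    trip_samples: int = 3      # consecutive over-limit samples needed to trip
    _over: int = field(default=0, init=False)

    def update(self, current_ma: float) -> bool:
        """Feed one current sample; return True if power should be cycled."""
        if current_ma > self.limit_ma:
            self._over += 1
        else:
            self._over = 0     # a single spike is ignored
        if self._over >= self.trip_samples:
            self._over = 0     # start counting afresh after the power cycle
            return True
        return False


monitor = LatchupMonitor(limit_ma=150.0)
samples_ma = [80, 85, 400, 90, 410, 420, 430, 82]
actions = [monitor.update(s) for s in samples_ma]
print(actions)
```

The isolated 400 mA spike is filtered out, while the sustained current increase trips the monitor on its third consecutive sample. The filter length is a trade-off: a longer filter gives fewer false trips, but the chip stays in latchup for longer.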

Figure 1. Experimental data on single event effects rate. Taken from J. Barth et al., "Single event effects on commercial SRAMs and power MOSFETs: final results of the CRUX flight experiment on APEX", NSREC Radiation Effects Data Workshop, 1998

Looking at Figure 1, one can see that the worst case is about one upset per two hundred days... per bit. Yes, every bit in memory is expected to flip about twice per year. But when we have megabits or gigabytes of memory, some of it is always corrupted, right? Yes, that’s a problem, and there are techniques to address it, but more on that a bit later.
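A quick estimate shows how the per-bit rate scales with memory size. It is a sketch that assumes upsets are independent, that every bit has the same worst-case rate of one upset per two hundred days quoted above, and that prefixes are decimal; the function names are mine.

```python
SECONDS_PER_DAY = 86_400


def expected_upsets_per_day(bits: int, days_per_upset_per_bit: float = 200.0) -> float:
    """Mean number of upsets per day in a memory of the given size."""
    return bits / days_per_upset_per_bit


def seconds_between_upsets(bits: int, days_per_upset_per_bit: float = 200.0) -> float:
    """Mean time between upsets anywhere in the memory, in seconds."""
    return SECONDS_PER_DAY / expected_upsets_per_day(bits, days_per_upset_per_bit)


for label, bits in [("1 Mbit", 10**6), ("1 Gbit", 10**9), ("1 GByte", 8 * 10**9)]:
    print(f"{label}: {expected_upsets_per_day(bits):.3g} upsets/day, "
          f"one every {seconds_between_upsets(bits):.3g} s")
```

Under these worst-case assumptions, a single megabit already sees thousands of upsets per day, or one every few seconds.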

The specific energy yield of an ionizing particle strike is called “linear energy transfer” (LET) and is measured in MeV·cm²/mg. LET depends non-linearly and non-monotonically on particle energy and is also related to the path length, which can vary from hundreds of nanometers to hundreds of millimeters for relevant particles and materials. Basically, most ionizing particles just punch through an IC and fly back to outer space. Low energy particles are much more common in a real space environment (see Figure 2). Important LET values are 30 MeV·cm²/mg (corresponding to iron ions) and 60 or 80 MeV·cm²/mg (normally considered the highest LET values to be taken into account). Another important figure is 15 MeV·cm²/mg, the maximum LET of the products of a nuclear reaction between a silicon atom and a proton or a neutron. Protons are important as they make up a significant part of solar radiation. Whilst they have very low LET on their own, the probability of the above-mentioned nuclear reaction is high enough to create a lot of events, especially in the Van Allen belts or during solar flares. Protons can also interact with nuclei of heavier elements, like tungsten (used in contacts) or tantalum (a popular anti-TID shielding material). Such secondary effects are the second most important reason not to pack your space-bound chips into lead covers in an attempt to increase their radiation hardness. The first one is, by the way, the launch price per kilo.
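To get a feeling for these units, LET can be converted into the charge deposited per micron of track in silicon. The sketch below uses the 3.6 eV per electron-hole pair quoted earlier and the density of silicon (about 2.33 g/cm³). It assumes a constant LET along the track and that all deposited energy goes into pair creation, so treat the result as an estimate; the function names are mine.

```python
SI_DENSITY_MG_PER_CM3 = 2330.0   # silicon density, about 2.33 g/cm^3
EV_PER_PAIR_SI = 3.6             # energy per electron-hole pair in silicon
ELEMENTARY_CHARGE_C = 1.602e-19  # charge of one electron, coulombs


def pairs_per_micron(let_mev_cm2_per_mg: float) -> float:
    """Electron-hole pairs created per micron of track in silicon."""
    mev_per_cm = let_mev_cm2_per_mg * SI_DENSITY_MG_PER_CM3
    ev_per_micron = mev_per_cm * 1e6 / 1e4   # MeV -> eV, cm -> um
    return ev_per_micron / EV_PER_PAIR_SI


def charge_per_micron_fc(let_mev_cm2_per_mg: float) -> float:
    """Deposited charge per micron of track, in femtocoulombs."""
    return pairs_per_micron(let_mev_cm2_per_mg) * ELEMENTARY_CHARGE_C * 1e15


for let in (1.0, 15.0, 30.0, 60.0):
    print(f"LET {let:>4} MeV*cm^2/mg -> {charge_per_micron_fc(let):.0f} fC/um")
```

A LET of 1 MeV·cm²/mg corresponds to roughly 10 fC per micron of track, so the 30 and 60 figures mean hundreds of femtocoulombs per micron.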

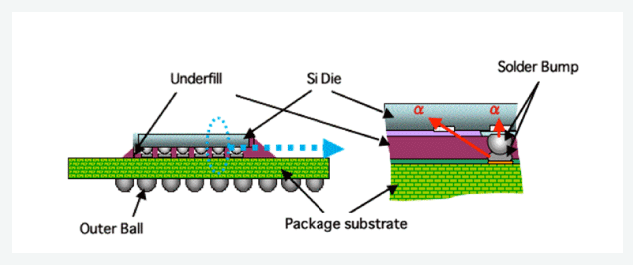

Helium nuclei (alpha particles) are also worth noting as a source of single event effects, not because there are some in solar radiation, but because plenty of alpha sources can be found in ordinary life, like lead solder and some IC packaging materials. If you have heard about low-alpha bumps and underfills, they are about single event mitigation in “mundane” applications not related to aerospace.

Figure 2. The number of different particles spotted during a two-year mission in space. Quoted from: Xapsos et al., "Model for Cumulative Solar Heavy Ion Energy and Linear Energy Transfer Spectra", IEEE Transactions on Nuclear Science, Vol. 54, No. 6, 2007

1, 30 or 60 MeV·cm²/mg: how much is it? The upset threshold of a standard SRAM cell in a 7 nm technology is much lower than one, while for 180 nm it can vary from one to ten. A special cell schematic can raise the threshold up to a hundred, but it is usually wiser to achieve 15 or 30 and to do the rest via error-correcting codes. 60 MeV·cm²/mg is most often found in requirements for destructive events, to ensure that the chip is highly likely to survive its full intended lifespan.
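To give an idea of what doing the rest via error-correcting codes means, here is a minimal sketch of single-error correction with the textbook Hamming(7,4) code. I picked it only because it is the smallest example: the article does not say which code any particular chip uses, and real memories typically use wider codes.

```python
from typing import List


def hamming74_encode(data: List[int]) -> List[int]:
    """Encode 4 data bits into a 7-bit codeword (positions 1..7)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4          # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4          # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4          # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(code: List[int]) -> List[int]:
    """Correct at most one flipped bit and return the 4 data bits."""
    c = list(code)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3   # 1-based position of the bad bit, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1          # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]]


word = [1, 0, 1, 1]
stored = hamming74_encode(word)
stored[4] ^= 1                        # a single event upset flips one stored bit
recovered = hamming74_decode(stored)
print(recovered == word)
```

The price is three extra bits per four data bits and some decoding logic. The code also corrects only one flipped bit per word, so a strike that upsets two bits of the same word defeats it.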

Displacement damage

Displacement effects are local destruction of the crystal lattice caused by an atom being "knocked out" of its intended place. The energy required for this is usually quite high, so most irradiating particles do not cause this effect. However, secondary radiation can, and there are plenty of protons in space. These local lattice defects decrease charge carrier mobility, increase noise and do some other damage. Due to their very local nature, they normally do not significantly affect conventional CMOS chips, but they dominate in solar cells, photodetectors, power transistors and other devices based on compound semiconductors, such as gallium arsenide and gallium nitride. Transistors in compound semiconductors are usually not MOS, but JFET or HEMT, so they lack gate oxide. This explains their high total dose tolerance: they simply do not suffer from the effects causing the rapid degradation of CMOS chips. However, displacement effects are much more significant in these materials, so they should be considered and weighed appropriately.

As we’re finished with the description of effects, let’s look at where and how they threaten integrated circuits.

Different orbits and other applications of radhard ICs

Figure 3. Total ionizing dose calculations, for ten years of satellite lifetime, under the shielding of 1 g/cm^2. Adapted from N. Kuznetsov, "Radiation danger on space orbits and interplanetary trajectories of satellites" (in Russian).

Figure 3 shows an example of total ionizing dose calculation for different orbits. There are multiple assumptions there, including solar activity and the shape, material and thickness of the shielding, but you can get the idea: the dose rate can vary by five orders of magnitude between orbits. At low orbits under the first Van Allen belt, the dose is absorbed so slowly that many off-the-shelf commercial chips can withstand several years in these conditions, like the laptops on the ISS do. Even much more fragile people can fly there for years without dramatic health consequences. Low orbits are extremely important as they encompass all crewed spaceflight, Earth remote sensing, many present communication satellites and the future internet-from-above constellations. Last but not least, almost all CubeSats are launched into low orbits.

Low orbit

Actually, the importance of low orbits is the root of multiple speculations that expensive radiation hardened chips are not needed at all, and that commercial off-the-shelf (COTS) parts could do everything if they were not rejected by the overly conservative industry. Yes, COTS parts can do a decent job, but there are some pitfalls, even at low orbits.

The Van Allen belts protect the Earth only from light particles, mainly solar electrons and protons. Heavier particles, even though they are much rarer, easily reach even our last shield, the atmosphere, and cause single event effects, including the catastrophic latchup capable of irreversibly destroying a chip at any moment. Therefore, commercial chips can be used only if they are somehow protected from latchup; otherwise the entire spacecraft can be lost.

Another problem is that the chips used in space are not just processors and memory, but also many other types, including power and analogue ones. Radiation tolerance of non-logic circuits is much more complex, less investigated and less predictable. Moreover, modern SoCs contain a lot of non-digital blocks like PLL, ADC, I/O circuits. For example, the most common reason for flash memory total dose failure is the high-voltage generator used for memory writing. Analogue circuits suffer from offset increase, small leakages can significantly affect the functioning of low-power analogue, power transistors are experiencing breakdown voltage degradation, and so on and so on.

It’s also important to remember that radiation sensitivity is, well, sensitive to process variations, sometimes even small ones. So, if the fab changes the temperature of some oxide growth step, you can throw your radiation testing results into the trash can. Commercial vendors never guarantee that different batches of the same product will contain the same die, or that the manufacturing process will stay stable for a long time. The Apple A9 processor of the iPhone 6s was produced at both TSMC (16 nm) and Samsung (14 nm), and the user has no way of knowing which version is inside a specific phone. Such an approach is unfortunately unacceptable for high-reliability circuits, and that’s why radhard chips are often manufactured at Trusted Foundries of some kind, or at least on automotive-oriented processes, as the car industry also cares about reliability and needs stable technology.

Other orbits

However, satellites don’t fly just on low orbits. I will take the “Molniya” orbit as an example of very different requirements. This orbit is named after the Soviet satellite which was there first. “There” is a highly elliptical, steeply inclined orbit with a minimal altitude of around 500 km and a maximal altitude of around 40 000 km. The orbital period is twelve or twenty-four hours, and the satellite spends most of the time near apogee, acting as a pseudo-static object and providing communications for polar regions where geostationary satellites can’t be seen.

Figure 4. Molniya orbit with hours marked. Taken from Wikipedia.

The lifespan of the very first Molniya satellites was very short, just a handful of months, primarily due to the degradation of the solar panels powering the radio transmitters. Why was the degradation so high? A perigee of 500 km and an apogee of 40 000 km mean that the satellite crosses the Van Allen belts twice each period, or four times per day. The Van Allen belts gather and concentrate solar electrons and protons, so the environment there is among the worst one can have.

Figure 3 promises a dose of some tens of kilorads per ten-year lifespan on high orbits, and some hundreds of kilorads if the satellite is in contact with the Van Allen belts. That’s higher than many commercial chips can withstand, so one will need significantly thicker, heavier and more expensive shielding to use them. It may still be cheaper than buying radiation hardened ICs, but here we descend into the world of satellite creation, which is out of this article’s scope. Let’s just say that shielding is heavy and therefore expensive to launch, while it doesn’t solve all problems and can even make some of them worse.
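Here is a back-of-the-envelope version of this comparison, assuming the dose accumulates at a constant rate and the shielding is fixed. The 50 krad part tolerance and the 30 and 300 krad mission doses are illustrative picks from the ranges quoted in the text, not data for any real part or orbit.

```python
def years_to_reach_tolerance(part_tolerance_krad: float,
                             mission_dose_krad: float,
                             mission_years: float = 10.0) -> float:
    """Years until the accumulated dose equals the part's TID tolerance,
    assuming the mission dose is accumulated at a constant rate."""
    annual_dose_krad = mission_dose_krad / mission_years
    return part_tolerance_krad / annual_dose_krad


PART_TOLERANCE_KRAD = 50.0  # hypothetical commercial part

for orbit, dose_krad in [("high orbit, 30 krad per 10 years", 30.0),
                         ("crossing the belts, 300 krad per 10 years", 300.0)]:
    years = years_to_reach_tolerance(PART_TOLERANCE_KRAD, dose_krad)
    verdict = "survives" if years >= 10.0 else "fails before"
    print(f"{orbit}: tolerance reached after {years:.1f} years, "
          f"{verdict} the ten-year mission")
```

In this sketch the same part outlives a ten-year mission on one orbit and fails in under two years on the other, which is why the orbit has to be known before asking whether a chip is good enough.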

The ultimate answer to the question of whether COTS chips can be used in space is “Yes, but”. There are many opportunities, but also many constraints. Also, if you want to use a COTS chip in your space-related project and invest in its radiation testing, stockpile your ten-year need. By the way, it’s a credible business model: the well-known and very respected company 3DPlus tests a lot of COTS chips, chooses the ones that happen to be better than others, and then packs them into its own hybrid modules found everywhere in space, including the Curiosity rover on Mars.

Military-grade and radiation hardness

It’s impossible to avoid the topic of “military-grade” chips while dealing with misconceptions about radiation hardness. They are believed to be radiation hardened, but the real situation is a bit more complicated. Not all military-grade chips are radhard and not all radhard chips are military-grade. If we look into the US military standard Mil-Std-883, we will find a lot of different environmental tests there: thermal cycling, humidity, salt atmosphere, etc.

Radiation is addressed in the following paragraphs:

1017.2 Neutron irradiation

1019.8 Ionizing radiation (total dose) test procedure

1020.1 Dose rate induced latchup test procedure

1021.3 Dose rate upset testing of microcircuits

1023.3 Dose rate response of linear microcircuits

Total dose? Check! Dose rate? Check. Single events? Sorry, nothing to find here. Many specifications for military-grade radhard chips include requirements for single event effects, but they are not part of the military standard. So, “military-grade” status does not guarantee that the chip will be able to work in space, or at the Large Hadron Collider. The best-known example of this misconception in action was the infamous Russian spacecraft “Phobos-Grunt”. It was sent to Mars in 2011, but never left Earth’s orbit. The official investigation concluded that the fatal failure occurred in an American military-grade SRAM chip which some poor engineer found to be suitable for space travel, while it wasn’t in fact protected from single event latchup.

Recent SEE testing of 1M and 4M monolithic SRAMs at Brookhaven National Laboratories has shown an extreme sensitivity to single-event latchup (SEL). We have observed SEL at the minimum heavy-ion LET available at Brookhaven, 0.375 MeV-cm2/mg

says the report on that very chip. The report was published in 2005, but wasn’t taken into account by “Phobos-Grunt” designers, who just supposed that “military-grade” is enough to fly to the Red planet.

Mundane applications

The importance of radiation hardness is not limited to space and military applications. The atmosphere works as the final shield between space radiation and life on Earth, but it also creates secondary particles, which are plentiful at airliner altitudes (a typical transatlantic flight can see a dozen single event upsets in the onboard computer). Some secondary particles even reach the ground and are seen in the devices with the largest memories, like supercomputers. X-ray radiation is routinely used in medicine, and radiotherapy is an important way to combat malignant tumours. More and more electronics are needed in medical devices, and they are not exempt from radiation effects either.

And, of course, we should not forget that all the fuss with lead-free solder was partially caused by the fact that lead and some other materials used in IC fabrication contain impurities of heavy elements like uranium. The use of these materials causes a small, but still well-measurable flux of alpha particles right next to vulnerable silicon. In the case of BGA packages or 3D assemblies, this happens over the entire surface of the die.

Luckily, alpha particles have a rather short ionization track (just a few microns, depending on energy), and multi-layer metallization helps to reduce their influence. The bad news is that at small process nodes the energy required for an upset is so low that every alpha particle able to reach the silicon surface causes one. For example, TSMC published a paper at the 2018 IEEE International Reliability Physics Symposium measuring the number of alpha-related upsets in 7 nm SRAM. So, the problem still exists in a largely lead-free world.

Figure 5. Solder bumps as the alpha radiation source. Image courtesy of Mitsubishi Materials.

I also want to say a few words on yet another application of radiation hardened chips: high energy physics and the nuclear industry. Hadron colliders and nuclear power plants require extremely robust electronics capable of working in radioactive zones for many years. The same would be the case for robots designed to deal with nuclear catastrophes like Chernobyl or Fukushima. TID requirements for these circumstances can be dozens or even hundreds of megarads (Si), which is three orders of magnitude more than in conventional space applications. The problem is further complicated by the fact that such durability is required not just from digital ICs, but also from power management and analog chips, which can be found in multichannel telemetry systems and servo motor drives. These chips can be much more vulnerable than digital ICs in terms of their reaction to transistor degradation. TID behaviour and hardening of digital circuits are well investigated and well understood, but for analog circuits things are much more interesting, as every case and every circuit may require an individual approach rather than a semi-automated application of known methods. The circuit schematic is often closely guarded know-how in analog design, and even more so in radhard analog.

Figure 6. Normal and radiation hardened bandgap voltage reference. Taken from Y. Cao et al., "A 4.5 MGy TID-Tolerant CMOS Bandgap Reference Circuit Using a Dynamic Base Leakage Compensation Technique", IEEE Transactions on Nuclear Science, Vol. 60, No. 4, 2013

Let's look at a good (and rare) example of such a task. The bandgap voltage reference is a simple and well-known circuit that can be found in almost any analog IC. This circuit normally contains a pair of bipolar transistors controlled by an operational amplifier. These bipolars show significant leakage under irradiation, and this leakage leads to significant output voltage changes, sometimes 10-20% under high doses, which corresponds to an effective ADC resolution of two to three bits. The circuit on the right keeps the reference voltage variation within 1% (which gives us roughly seven bits) under a total dose of 4.5 MGy. As you may see, it wasn’t easy to achieve this outstanding result: local feedback loops are scattered everywhere, subtracting base current from the equation and therefore getting rid of the leakage current too. This radhard version contains four times more transistors and consumes twice as much power as its conventional counterpart. The worst part is that, as I’ve said, every circuit normally requires an individual approach, making an analog radhard designer's work very challenging. And there is also the problem of single event effects, whose solution is likewise poorly formalized and very circuit-dependent.
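The link between reference drift and ADC resolution can be checked with one line of arithmetic. The sketch assumes the simple rule that the usable resolution is log2(1/variation), that is, one LSB is as large as the reference drift; the article does not state which definition it uses, so take the numbers as a rough estimate.

```python
import math


def effective_bits(relative_variation: float) -> float:
    """Resolution at which one LSB equals the reference voltage variation."""
    return math.log2(1.0 / relative_variation)


for variation in (0.20, 0.10, 0.01):
    print(f"{variation:.0%} reference variation -> "
          f"{effective_bits(variation):.1f} effective bits")
```

Under this definition, 10-20% drift leaves two to three bits, and 1% leaves between six and seven; a full seven bits requires the variation to stay below about 0.78% (1/128).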

Radiation hardness and process node

For a long time, the website of one established microelectronics fab with old links to the aerospace industry contained a statement that radiation tolerance could not be achieved at process nodes below 600 nm, as “charged particles pierce silicon and destroy transistors”. Surprisingly, but likely unrelated, 600 nm was the minimal available process node at that fab. A high-ranking official of another fab said in an interview that it’s “technologically impossible” to create radiation hardened ICs at nodes below 90 nm. You may guess what the minimal node at that fab was. I was quite surprised to read that interview, as I was working on a radhard 65 nm chip at that moment. I can understand some marketing nonsense, but such words are dangerous in the long term, especially when said to a wide audience or to decision makers.

I also regularly see the reasoning that ICs built on coarse process nodes are SEL-immune due to the very high energy required to influence their transistors, so the long-time use of proven technology is not just justified, but simply necessary. Or vice versa: sub-something process nodes work with very low supply voltages, too low to exhibit SEL, as the parasitic thyristor simply can’t turn on. Or there are opinions that the problem is not in process nodes, it’s CMOS technology that is fundamentally weak (as evidenced by some tests done by the claimant in the early seventies), while in the good old times radhard ICs were bipolar/SOI/GaAs. So, since CMOS technology is fundamentally flawed, there is no other way than to continue using ancient tech for spaceships. Preferably, vacuum tubes.

"Radhard" equals "old"?

In fairness, some old ICs built on multi-micron process nodes really are insensitive to single events. But “some” doesn’t mean “all”, and all kinds of problems have been documented through the history of space exploration. Large process nodes understandably require a lot of energy from an ionizing particle to flip a bit, but they also require the same amount of energy at each switching during normal operation. So I wish a lot of luck to anyone willing to build an Intel Core processor equivalent out of 74-series logic, and I would love to see the rocket able to lift such a monster into the air.

On the other hand, microelectronics is not limited to microprocessors and memory. There is a huge variety of tasks where the latest process nodes are unnecessary, unprofitable or simply unsuitable. The global market for ICs built on 200 mm wafers (process nodes of 90 nm and above) has been growing for several years, to the point of periodic shortages of production equipment. “Outdated” fabs produce both old and new designs, and many manufacturing companies are commercially successful despite not being on par with TSMC and Samsung. So, take all the process node fuss with a grain of salt when data processing isn’t the topic.

Other factors encouraging the use of older process nodes in aerospace are the longer life cycle of such products, expensive certification and small production quantities. The design of a simple 180 nm IC can cost a few million euros, and when these millions, plus a few more millions required for certification and testing, are divided by a thousand ICs, each of these ICs becomes very expensive. And what if we need to recoup a few hundred million for a 7 or 5 nm design? These troubles lead to two things. First, the design of most radiation hardened ICs in the world is government-subsidized. Second, successful designs are manufactured for as long as possible, and IP blocks from them are reused again and again to lower costs, forcing the manufacturer to stay at the proven process node. These factors combined can create an illusion that most radhard ICs are outdated. The clients also favour proven projects, or, to be more precise, flight-proven projects. If a chip has a heritage in space, it’s a colossal competitive advantage, and you may be sure that this advantage is exploited as long as possible, even when the design itself becomes outdated.

The public image of radhard ICs is further diminished by the fact that the most famous of them are used in long-term scientific missions. In 2015, I saw a lot of news like “New Horizons has the same CPU as the 20-year-old original Sony PlayStation”. Well said, well said. New Horizons was launched in 2006, and its development had begun in 2000, which was the year of the first flight of the processor it used. The Mongoose-V processor shares the MIPS ISA with the PlayStation’s MIPS R3000, but it’s an entirely different chip released in 1998, some eight years before the launch of New Horizons and seventeen years before it was featured in the news. Here is another example: PowerPC 750 processors came out for commercial applications in 1997, particularly for iMac computers. Their radhard counterpart, the RAD750, was released in 2001 and flown into space in 2005, four years later. It offered the highest computational performance available for the Curiosity Mars rover, so there was a lot of news about an ancient processor on Mars later in 2012. And, to make it even funnier, almost the entire Curiosity design was reused for Perseverance, which is due to produce more stupid processor-related news headlines next year.

"Radhard" equals "new"?

Despite all of the above, the newest radhard ICs of today are designed at nodes between 45 and 20 nm, like the fresh radhard Xilinx Kintex FPGAs. The American RAD5500 series is manufactured at 45 nm, the European DAHLIA, which is due in 2021, uses 28 nm, and so on. GlobalFoundries already offers a 12 nm process for aerospace applications, so modern radhard ICs are definitely modern.

There are many topics to be researched and there is no shortage of scientific articles on the topic of radiation hardness of modern technologies as new challenges tend to emerge with each new generation. Process node shrinking definitely affects radiation hardness, but this effect is complex and not necessarily negative. The general trend is that TID influence decreases while the role of single events becomes more important. Thinner gate oxides lead to smaller threshold voltage shifts, but then these gate oxides are not silicon oxide anymore, and their interface with silicon is different, and so on and so on.

Figure 7. Two versions of radiation hardened inverter. Taken from Vaz et al., "Design Flow Methodology for Radiation Hardened by Design CMOS Enclosed-Layout-Transistor-Based Standard-Cell Library", Journal of Electronic Testing, volume 34, 2018

Figure 7 shows two implementations of an inverter. On the right, we see the full set of measures: enclosed layout transistors (ELTs) to combat total dose effects and individual guard rings against inter-transistor leakage and SEL. A simpler design for lower total dose requirements is shown on the left, where the transistors are linear. It’s worth noting that a total dose tolerance of 50-100 krad(Si) is quite sufficient for many space applications, and normal linear transistors do an excellent job there while saving area, avoiding aspect ratio limitations and offering better matching than ELTs. Also note that only nMOSFETs suffer from source-drain leakage, so only they need enclosed gates if high total dose tolerance is required, but pMOSFETs are often drawn as ELTs too for easier size balancing between nMOS and pMOS.

The relationship between single events and process nodes is more interesting. The approximate diameter of the charge collection area of an ionizing particle hit is around one micron, which is much bigger than the size of a memory cell in deep submicron process nodes. And indeed, experiments show multiple bit upsets from a single ion strike.

Figure 8. Multiple bit upsets in two different 6T SRAM cell arrays. Taken from M. Gorbunov et al., "Design of 65 nm CMOS SRAM for Space Applications: A Comparative Study", IEEE Transactions on Nuclear Science, Vol.61, No.4, 2014

Figure 8 shows experimental data on single-event upsets in a 65 nm bulk technology. On the left is a normal commercial 6T SRAM design. Ten upsets from a single hit! A Hamming code won’t protect you from such a disaster. So, when we’re talking about commercial ICs, coarse process nodes are somewhat better than smaller ones, as they will mostly experience single-bit upsets, which are easier to correct. But when we’re designing a radhard chip from scratch, there is a plethora of architectural, schematic and layout solutions capable of delivering both high single event tolerance and high performance. The right side of Figure 8 also shows results from a 6T SRAM, from the same die, with the same schematic, but with a different layout. The price of getting rid of most multiple bit upsets and latchup, and of increasing total dose hardness, is very simple: a fourfold area increase. It doesn’t sound nice, but no one said it would be easy. However, if you’re ready for compromises, Radiation Hardening by Design allows achieving any predetermined level of radiation hardness at any bulk technology.
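To illustrate why a single-error-correcting Hamming code is helpless against multiple bit upsets, here is a minimal sketch using the textbook Hamming(7,4) code. It is not the error correction scheme of any particular memory; real designs typically use wider codes, and the function names are invented for this illustration. The point is only that one flipped bit is repaired, while two flipped bits in the same word make the decoder "correct" a third, innocent bit.

```python
def hamming74_encode(data):
    """Encode 4 data bits into a 7-bit codeword laid out as p1 p2 d1 p3 d2 d3 d4."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over codeword positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct at most one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3  # 1-based position of the suspected bad bit
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


def flip_bits(codeword, positions):
    """Return a copy of the codeword with the given 0-based positions inverted."""
    upset = list(codeword)
    for pos in positions:
        upset[pos] ^= 1
    return upset


if __name__ == "__main__":
    data = [1, 0, 1, 1]
    stored = hamming74_encode(data)
    single = hamming74_decode(flip_bits(stored, [2]))
    double = hamming74_decode(flip_bits(stored, [2, 3]))
    print("single upset recovered:", single == data)
    print("double upset recovered:", double == data)
```

This is one reason why physical interleaving of bits from different logical words is a common companion to error correction: it turns one multi-cell strike into several single-bit errors in separate words.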

Why predetermined? Because different requirements could be satisfied with different means. But why not apply all of them at once and be fine for every possible application? Most radiation hardening methods normally come at the cost of compromising functional parameters to some extent (supply current, area, speed, etc.). Therefore overengineering will lead to non-competitive products. Sure, such low-volume ICs are rarely made for just one application and should be flexible, but detailed and reasonable radiation requirements are absolutely vital for the successful design.

Silicon on Insulator (SOI)

The eye of the attentive reader could’ve caught the word “bulk” in the phrase “predetermined level of radiation hardness at any bulk technology”. Isn’t it superfluous there? Isn’t it even wrong? It’s widely supposed that all the best radhard ICs are fabricated using “silicon on insulator” or “silicon on sapphire” technology. Right?

The “silicon on insulator” technology has long had a firmly entrenched reputation for being “inherently radiation-hard”. The roots of this popular fallacy go back into antiquity, when its predecessor, SOS (silicon on sapphire), was actively used for military designs. Why? Transistors in SOS/SOI are electrically isolated from each other and from the substrate. This means a much smaller radiation-induced charge collection volume, which is quite handy for dealing with high dose rate events, as it significantly reduces the chip shutdown time right after a nearby nuclear explosion. That was indeed an important trait for a product designed during the Cold War.

Another part of the “SOI = Radhard” myth is insensitivity to latchup, including dose rate latchup. Latchup (also known as the “thyristor effect”) is one of the main headaches for designers of spaceborne systems, as it’s unpredictable and catastrophic. So a technology that deals with it for free could naturally be considered a gift from heaven. But the whole picture is a little bit more complicated.

Figure 9. CMOS technology cross-section with parts of parasitic thyristor causing the latchup.

The cause of the latchup effect is the parasitic thyristor structure present in bulk CMOS technology. If the resistances Rs and Rw are large enough, a hit by an ionizing particle can deposit enough charge to turn on the parasitic thyristor and create a short between supply and ground. How big are these resistances in real chips? The answer is quite simple: every contact to the substrate or well takes extra area, so their number is usually minimized to make chips cheaper. This means that a random commercial IC is more likely to be vulnerable to latchup than not. Latchup, however, can occur not just after an ion strike, but also due to ESD, high temperature, excessive current density or a door being shut in a nearby room, so automotive and industrial IC designers are familiar with the topic and more likely to take measures against it.

A chip can be brought out of the latchup condition by power cycling, and such a reboot is quite acceptable in many space applications, so many commercial products can still be used in space, albeit with some caution. So-called latchup current limiters are very popular in radhard systems, especially in those requiring computational performance unattainable with current radhard processors. But such a solution has many limitations. A power reset is not always possible, as there is no shortage of real-time calculations. A reboot during an important manoeuvre can put an end to a long mission. The current consumption of a modern IC may vary by a few orders of magnitude depending on its operating mode, so the current consumption in the “nothing happens and there is a latch” state may be lower than in normal high-performance operation. Where should the current limit be set for such a chip? The required system reaction time also depends on the protected chip, as some chips are very vulnerable while others can survive thousands of latchups if they are reset sufficiently fast.
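The threshold problem can be made concrete with a small sketch. It assumes the simplest possible limiter, one fixed current threshold, and checks whether any threshold exists that never trips during normal operation yet always trips during latchup. The function name and all current values are hypothetical and chosen only for illustration; they are not measurements of any real chip.

```python
def fixed_limit_window(normal_mode_currents, latched_currents):
    """Return the (low, high) range in which a fixed current limit works.

    A limit inside the range stays above every normal operating current and
    below every observed latchup current. Returns None when the two sets of
    currents overlap, i.e. when no single fixed threshold can tell them apart.
    """
    highest_normal = max(normal_mode_currents)
    lowest_latched = min(latched_currents)
    if highest_normal < lowest_latched:
        return (highest_normal, lowest_latched)
    return None


if __name__ == "__main__":
    # Hypothetical simple chip: supply current in amperes barely changes with mode.
    simple_chip = fixed_limit_window([0.02, 0.05, 0.08], [0.4, 0.6])
    # Hypothetical modern processor: idle, active and peak currents differ by
    # orders of magnitude, and a latch in idle mode draws less than peak load.
    processor = fixed_limit_window([0.01, 0.5, 3.0], [0.3, 3.2])
    print("simple chip window:", simple_chip)
    print("processor window:", processor)
```

When the function returns None, a practical limiter would need something smarter than a fixed threshold, for example a limit that follows the chip's operating mode, which is one reason such protection has to be designed per device.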

If a chip is fabricated in SOI technology, none of these problems is a concern anymore, and no protection circuitry is necessary at all. That’s why commercial SOI chips are so attractive for space applications. For example, the new American spacecraft Orion is controlled by a commercial SOI-based PowerPC 750 microprocessor rather than its radhard version, the RAD750.

Figure 10. Leakage paths in bulk CMOS technology. Taken from J. Schwank et al., "Radiation effects in MOS oxides", IEEE Transactions on Nuclear Science, Vol. 55, No. 4, 2008

Then what’s the problem? Latchup is not the only radiation effect, and SOI is not inherently better than bulk technology in terms of either TID or SEE hardness. Figure 10 shows two leakage paths in bulk CMOS technology. Both of these paths are easily closed with proper layout design: the first with enclosed-layout n-channel transistors, the second with guard rings. These solutions have drawbacks from the circuit’s functional point of view (restrictions on the minimum size of an enclosed transistor, area loss when using guard rings), but in terms of ensuring radiation hardness, they are very effective.

Figure 11. SOI buried oxide leakage path. Taken from J. Schwank et al., "Radiation effects in MOS oxides", IEEE Transactions on Nuclear Science, Vol. 55, No. 4, 2008

In SOI technology, there is another leakage path, from the source to the drain along the boundary between the silicon and the buried oxide. The buried oxide is much thicker than the gate oxide, which means that it can accumulate a lot of positive charge. If we consider the “lower” transistor (the right part of Figure 11), for which the buried oxide acts as the gate dielectric, we will see that in a normal situation the gate-source voltage of this transistor is zero and its threshold voltage is several tens of volts, i.e. no current flows through this transistor. Under irradiation, positive charge accumulates in the buried oxide (this process is influenced by the geometry of the main transistor, in particular by the thickness of the silicon device layer), and the threshold voltage of the “lower” n-channel transistor drops. As soon as it falls below zero, current begins to flow freely through the transistor along the uncontrolled bottom channel. Thus, from the point of view of total ionizing dose, SOI technology is fundamentally worse than bulk technology. But maybe there is a way to fix the situation somehow?
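A rough feeling for why oxide thickness matters so much can be obtained from the standard charge-sheet estimate of the threshold shift, ΔVt = -q·N·t_ox/ε_ox, where N is the areal density of trapped holes. The sketch below rests on loud assumptions: the trapped charge is treated as a sheet located at the silicon interface, the dielectric is plain SiO2, and both the trapped charge density and the two thicknesses are hypothetical round numbers, not data for any real process. It also uses the same charge density for both oxides, which flatters the thick oxide, since per the text a thicker oxide accumulates more charge.

```python
ELEMENTARY_CHARGE_C = 1.602e-19
VACUUM_PERMITTIVITY_F_PER_M = 8.854e-12
SIO2_RELATIVE_PERMITTIVITY = 3.9  # assumes a plain silicon dioxide dielectric


def threshold_shift_v(trapped_holes_per_cm2, oxide_thickness_nm):
    """Estimate the n-channel threshold voltage shift from trapped positive charge.

    Charge-sheet approximation: all trapped holes sit at the silicon/oxide
    interface, so the shift is -q * N * t_ox / eps_ox (negative for holes).
    """
    holes_per_m2 = trapped_holes_per_cm2 * 1e4
    thickness_m = oxide_thickness_nm * 1e-9
    permittivity = SIO2_RELATIVE_PERMITTIVITY * VACUUM_PERMITTIVITY_F_PER_M
    return -ELEMENTARY_CHARGE_C * holes_per_m2 * thickness_m / permittivity


if __name__ == "__main__":
    trapped = 1e12  # hypothetical trapped hole density, cm^-2
    gate_shift = threshold_shift_v(trapped, oxide_thickness_nm=2)      # hypothetical thin gate oxide
    buried_shift = threshold_shift_v(trapped, oxide_thickness_nm=150)  # hypothetical buried oxide
    print(f"thin gate oxide shift: {gate_shift:.3f} V")
    print(f"buried oxide shift:    {buried_shift:.2f} V")
```

Even with identical trapped charge, the shift scales linearly with thickness, so under these assumptions the back-gate threshold moves by volts where the front gate moves by a fraction of a volt.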

The substrate is usually grounded (in fact, connected to the lowest available potential), but in SOI nothing prevents us from setting negative voltage there and closing that parasitic back gate. This idea is, in fact, actively used — and in FDSOI technologies active back gate control is even used in their normal operation to minimize leakages in low-power modes and maximize speed when necessary. However, there is a catch: when we apply a high electric field to the buried oxide, we don’t just close the back transistor, but also accelerate the accumulation of positive charge. As a result, depending on technology specifics and the magnitude of the voltage applied, it’s possible that the total dose hardness will become even worse! There are other details, but in general, it’s possible to achieve almost any TID hardness level using standard CMOS technology, but there are some fundamental limitations for SOI. These limitations are normally negligible for low-orbit space applications, but if we’re speaking about multi-Megarad levels that could be present in the nuclear industry, commercially unfeasible technology changes are necessary for SOI.

Single event upsets in SOI are no less interesting. On the one hand, the charge collection volume in SOI is much smaller (although there is a long-running argument about the exact shape of this volume and its possible connection to the bulk). This means that we get less excess charge and can dissipate it through the supply lines faster, increasing the chances of logic masking when non-memory cells are hit.

On the other hand, this small volume has a small capacitance, so even a small deposited charge can raise the voltage and turn on the parasitic bipolar transistor formed by the source, body and drain. If this happens, the deposited charge is multiplied by the gain of this parasitic transistor. In practice, this means that the threshold LET drops to levels below 1 MeV·cm²/mg, and then effectively any incoming particle will cause a bit upset.
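The effect of bipolar multiplication on threshold LET can be sketched with simple arithmetic. The conversion from LET to deposited charge uses standard silicon constants (density 2.33 g/cm³, about 3.6 eV per electron-hole pair), which gives roughly 10 fC per micron of track at 1 MeV·cm²/mg. Everything else below is a hypothetical illustration: the collection path length, the critical charge and the bipolar gain are invented round numbers, and the model simply multiplies deposited charge by the gain, as described in the text.

```python
SILICON_DENSITY_MG_PER_CM3 = 2330.0
EV_PER_ELECTRON_HOLE_PAIR = 3.6
ELEMENTARY_CHARGE_C = 1.602e-19


def deposited_charge_fc(let_mev_cm2_per_mg, path_um):
    """Charge in fC generated along a track of given length in silicon."""
    ev_per_cm = let_mev_cm2_per_mg * 1e6 * SILICON_DENSITY_MG_PER_CM3
    ev_per_um = ev_per_cm * 1e-4
    pairs = ev_per_um * path_um / EV_PER_ELECTRON_HOLE_PAIR
    return pairs * ELEMENTARY_CHARGE_C * 1e15


def threshold_let(critical_charge_fc, path_um, bipolar_gain=1.0):
    """Lowest LET whose amplified charge reaches the cell's critical charge."""
    charge_per_unit_let = deposited_charge_fc(1.0, path_um) * bipolar_gain
    return critical_charge_fc / charge_per_unit_let


if __name__ == "__main__":
    film_um = 0.05    # hypothetical charge collection path in a thin SOI film
    q_crit_fc = 1.0   # hypothetical critical charge of a memory cell
    print("no bipolar gain:", threshold_let(q_crit_fc, film_um, bipolar_gain=1.0))
    print("bipolar gain 5: ", threshold_let(q_crit_fc, film_um, bipolar_gain=5.0))
```

With these made-up numbers the threshold LET falls from above 1 MeV·cm²/mg to below it once the parasitic transistor turns on, which is the qualitative behaviour the paragraph describes.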

This negative effect, of course, can be mitigated by a careful low-resistance connection between the transistor body and the respective power bus (or, in some cases, the transistor source). But no one does this in commercial chips, as these connections take a lot of area and give nothing in return. Even in a radhard chip, losing some area in each transistor can be a significant downside compared to bulk alternatives, where one contact per 4-8 memory cells is often sufficient to prevent both latchup and parasitic bipolar multiplication. Even some guard rings can be implemented with a smaller area loss.

SOI gets another important advantage at small process nodes where dielectric isolation helps prevent multiple bit upsets from a single particle, but modern cells are so small that a single ion track can directly affect two of them. However, it’s still much better than 10-bit upsets seen in experiments with bulk technology.

Summing things up, SOI is not “inherently radiation hardened”, but it has some significant advantages and disadvantages compared to traditional bulk technology. The advantages could be exploited for a great effect, while disadvantages should be mitigated with a proper design. But the same is also true for bulk technology, so the proper process choice is not as trivial as it may seem and should be taken seriously in every single project. One should deeply understand the application to achieve desired levels of radiation hardness without making the chip unnecessarily complicated and too expensive.

That's all, folks!

Many engineers across the globe are working on radiation hardness, and it’s completely impossible to cover everything in one article, especially one aimed at a wider audience. So, my colleagues will probably find plenty of oversimplifications or even mistakes, which I will be happy to discuss and correct. While not trying to be exhaustive, I hope that I gave my readers a brief understanding of what radiation hardening of electronic circuits is and that I was able to dispel some related misconceptions. Microelectronics in general, and its special applications in particular, is one of the fastest evolving fields of applied science, so common knowledge becomes outdated very fast, and simple recipes are not used simply because they don’t exist anymore.